Blockchain for AI — The Safety Cage Against a Machine Uprising

August 14, 2025 · YD (Yehor Dolynskyi)

How blockchain can act as a safety cage for advanced AI systems.


Why the GPT-5 era demands a decentralized trust architecture

“Power without limits is a threat.

Control without trust is censorship.

Trust without verification is an illusion.”

GPT-5: A new level of capability — and a new level of risk

In 2025, OpenAI released GPT-5.

The launch came without flashy presentations, but with significant changes:

- Preparation: Select labs gained access three weeks prior to public release; testing was more systematic.

- Consolidation: A streamlined lineup — replacing the o3/4o/o4-mini model zoo with a single product offering reasoning modes (low/medium/high).

- Access: Up to ~400k tokens available for testing — useful for initial evaluation.

- Presentation: Issues in visual materials — mislabeled charts, inconsistent values — reducing trust in claimed metrics.

GPT-5 is not an “intelligence revolution” but an economic efficiency revolution: better quality, lower price, and strong pressure on competitors.

But it also signals rising autonomy and deeper integration of AI into real processes.

🧠 From tool to agent: the shift that changes the rules

GPT-5 and other large models can now:

- manage data and handle file operations,

- initiate transactions,

- interact with APIs and smart contracts,

- participate in workflows and DAO governance.

This means AI increasingly acts not as a tool, but as a system participant.

That demands a trust architecture to ensure:

- action logging,

- source verification,

- access control,

- transparent update history.
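
To make the action-logging requirement concrete, here is a minimal sketch of a hash-chained log in Python. It is not a blockchain, only the tamper-evidence idea that one relies on: each entry commits to the hash of the entry before it, so editing or reordering history breaks verification. The function names (`append_action`, `verify_log`) and the action fields (`agent`, `op`, `target`) are hypothetical and chosen for illustration.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, action: dict) -> str:
    """Hash an action together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "action": action}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_action(log: list, action: dict) -> dict:
    """Append an action to the log, chaining it to the previous entry."""
    prev_hash = log[-1]["hash"] if log else GENESIS_HASH
    entry = {"prev": prev_hash, "action": action,
             "hash": entry_hash(prev_hash, action)}
    log.append(entry)
    return entry


def verify_log(log: list) -> bool:
    """Recompute every hash; an edited or reordered entry breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != entry_hash(prev_hash, entry["action"]):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical agent actions, for illustration only.
log = []
append_action(log, {"agent": "agent-1", "op": "read_file", "target": "report.csv"})
append_action(log, {"agent": "agent-1", "op": "call_api", "target": "payments"})
print(verify_log(log))  # True

log[0]["action"]["target"] = "secrets.csv"  # simulate tampering with history
print(verify_log(log))  # False
```

A local chain like this only detects tampering if someone outside the operator's control holds a copy of the latest hash. That is the role a public ledger would play.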

📉 GPT-5 benchmarks: strengths and vulnerabilities

From tests and observations:

- Hallucinations: Generally reduced compared to o3, but in some tests (such as SimpleQA) o3 performs the same, so the methodology needs review.

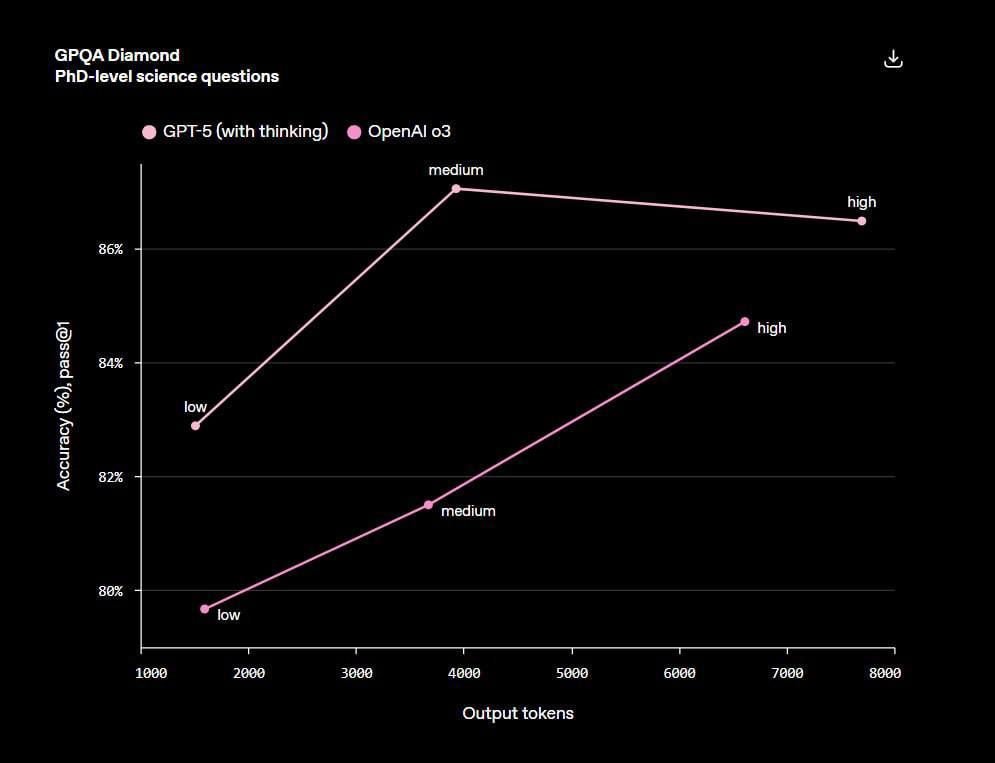

- Complex tasks: On PhD-level tasks the model leads on average, but longer reasoning time can lower quality, which challenges the “let it think longer” strategy.

- Practical value: On “get the job done” tasks, GPT-5 wins in cost-to-value ratio.

- Methodology: Errors and anomalies in charts undermine trust — without independent metric verification, results can be distorted.

These findings directly point to the need for external, verifiable auditing — where blockchain offers ready-made mechanisms.

Blockchain as a trust infrastructure for AI

As models gain autonomy, blockchain can perform five critical functions:

- Immutable action logs — recording all queries, decisions, and operations.

- Decentralized identity (DID) — linking an AI agent to a verifiable digital identity.

- Smart contract access control — defining what the model can and cannot do.

- Data audit — verifying training sources and operational data.

- Proof-of-Model — cryptographic signing to prove which model actually responded, especially in critical domains like medicine, autonomous transport, and finance.
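
As a rough illustration of the Proof-of-Model idea, the sketch below binds a response to a fingerprint of the model that produced it. Several things here are assumptions rather than an established scheme: the fingerprint is simply a SHA-256 digest of a byte string standing in for the model weights, the function names are hypothetical, and an HMAC from Python's standard library stands in for a real signature. A production design would more likely use an asymmetric signature so that verifiers never hold the signing secret.

```python
import hashlib
import hmac
import json


def model_fingerprint(weights: bytes) -> str:
    """SHA-256 digest standing in for a hash of the real model artifact."""
    return hashlib.sha256(weights).hexdigest()


def sign_response(key: bytes, fingerprint: str, prompt: str, response: str) -> str:
    """Bind a prompt/response pair to a model fingerprint with an HMAC tag."""
    message = json.dumps(
        {"model": fingerprint, "prompt": prompt, "response": response},
        sort_keys=True,
    ).encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_response(key: bytes, fingerprint: str, prompt: str,
                    response: str, tag: str) -> bool:
    """Check that the tag matches the claimed model, prompt, and response."""
    expected = sign_response(key, fingerprint, prompt, response)
    return hmac.compare_digest(expected, tag)  # constant-time comparison


# Demo values only; the key and "weights" are placeholders.
key = b"demo-key-not-for-production"
fp_a = model_fingerprint(b"weights of model A")
fp_b = model_fingerprint(b"weights of model B")

tag = sign_response(key, fp_a, "What is 2 + 2?", "4")
print(verify_response(key, fp_a, "What is 2 + 2?", "4", tag))  # True
print(verify_response(key, fp_b, "What is 2 + 2?", "4", tag))  # False
```

The second check fails because the tag was issued for model A's fingerprint. This is the property that would expose a silent model substitution.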

⚠️ Why this is critical now

GPT-5 is already cheaper and better than most competitors.

The market is entering a phase of price dumping and accelerated competition (with Gemini 3 and new Anthropic models expected soon).

This incentivizes AI deployment in more processes, often without adequate safety measures.

Risks:

- Uncontrolled decision-making by agents.

- Manipulation via closed algorithms.

- Model substitution (routing errors) with no way to prove the source.

- Loss of trust due to distorted metrics and lack of transparency.

AI + Blockchain: integration strategy

To mitigate these risks, we need to:

- Implement on-chain auditing of AI actions in critical scenarios.

- Use cryptographic model verification to prevent substitution.

- Create decentralized data registries with managed access for AI.

- Develop reasoning-time optimization guides to balance quality and cost.

- Build blockchain-based control into product strategy from the design stage.
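
On-chain auditing does not have to mean writing every AI action to a ledger. One common pattern, sketched here under stated assumptions, is to batch action records off-chain and publish only a Merkle root, so a single short hash commits to the whole batch. The record format and the `merkle_root` helper are hypothetical. Duplicating the last node on odd levels is a simplification chosen for brevity, and the step of actually submitting the root to a chain is left out.

```python
import hashlib


def sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(records: list[bytes]) -> str:
    """Return the hex Merkle root of a batch of records.

    Leaves and inner nodes get different prefixes so a leaf can never be
    mistaken for an inner node. Odd-sized levels duplicate their last node.
    """
    if not records:
        raise ValueError("need at least one record")
    level = [sha256(b"\x00" + record) for record in records]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [
            sha256(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level), 2)
        ]
    return level[0].hex()


# Hypothetical batch of serialized agent actions.
batch = [
    b'{"agent": "agent-1", "op": "read_file"}',
    b'{"agent": "agent-1", "op": "call_api"}',
    b'{"agent": "agent-2", "op": "sign_tx"}',
]
root = merkle_root(batch)
print(root)  # the only value that would need to be published on-chain

# Changing any record in the batch changes the root.
tampered = [batch[0], b'{"agent": "agent-1", "op": "delete_db"}', batch[2]]
print(merkle_root(tampered) != root)  # True
```

An auditor who later receives the full batch can recompute the root and compare it with the published value, which keeps on-chain cost flat regardless of batch size.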

📌 Conclusion

AI evolves fast, but trust evolves slowly.

GPT-5 showed once again that it is possible to lower cost and raise quality at the same time.

Blockchain can be the safety cage that doesn’t slow AI down, but prevents it from breaking loose.

In the coming years, the question will no longer be: “Do we need AI control?”

It will be: “Why haven’t we implemented it yet?”

— YD, co-authorship and technical consulting by George Holynskyi